Do you have a great idea for an app, but you don’t have any idea how to implement it?

Have you developed your first app using low-cost developers, and now you realize that the design is inadequate for the next stage of your product?

Do you need someone with many years of senior technical leadership experience to help you meet with investors, evaluate vendors, build technical roadmaps and designs, and maybe even code up your first app?

Do you want someone available when you need them, without being tied to long-term contracts? Do you need the flexibility to pay only for what you need?

A partial list of past clients includes:

- Mosiak (real estate)

- Cohere (wellness services)

- WeAreRobyn (female health services)

- Arnie (ESG investing)

- XP Investments (trading)

- Xureal (gaming)

- Havas Health (healthcare)

- Story2 (education)

- Blossom (coaching)

- Kalpsys (Zocdoc competitor)

- Purple Ant (insurtech and IoT)

- Golden Pear (legal financing)

- Blue Apron (food prep)

- OneEleven (financial wellness)

- Namely (payroll)

- and more …

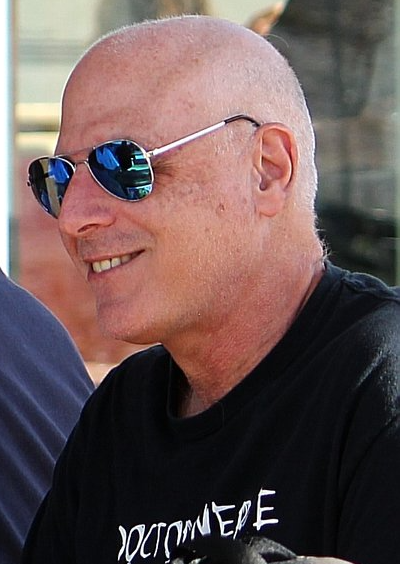

CTO as a Service (CaaS) is run by Marc Adler

You only pay for the services you use

A basic tenet of cloud computing is that you pay for what you use. If you need only a little computing power, you pay only for the resources you actually consume. If your business has busy periods several times a year, you pay for a lot of computing power only during those peaks, and for the rest of the year you pay only to “keep the lights on”.

Just as with Amazon Web Services, Microsoft Azure, or Google Cloud, you pay CTO as a Service only for the time you use us. Unlike engaging a major IT consulting firm, there are no long-term contracts to lock you in. If you need us for 20 hours a week, that’s what you pay for. If you want to keep us on a monthly retainer to meet with your development team once a week, that’s all you pay for. If you need us at a meeting with investors, you pay only for the meeting. And if you want to engage us more, you simply pay for the additional hours. In this way, you can think of CaaS the way you think of your lawyer or accountant.

Below is a video of a roundtable that I did with CTO Craft on February 2, 2023, in which I describe my life as a fractional CTO.

Contact CaaS

If you think that my background can help your organization, feel free to contact me at info@ctoasaservice.org.

(Site Header photo: Futurama Exhibit, 1964 World’s Fair)